Digital technologies are profoundly changing the world of music. Many skills have become obsolete and new talents are emerging. But what will the role of the artist be in the future?

by Gadi Sassoon

From the 90s to today, the digital revolution has upset the very foundations of the music business: the distribution and sale of physical recordings have been replaced by streaming services; in parallel, musicians have embraced social media to interact directly with fans. These changes are evident to anyone who has consumed music in recent years.

However, there is a less obvious side to this transformation: the digitization of musical production and composition. Recording studios with million-dollar equipment have been replaced by programs for laptops, tablets and smartphones, putting once-inaccessible means in the hands of millions of people; in the professional arena, even orchestras have to compete with software that allows the composer-programmer to create entire symphonies credibly.

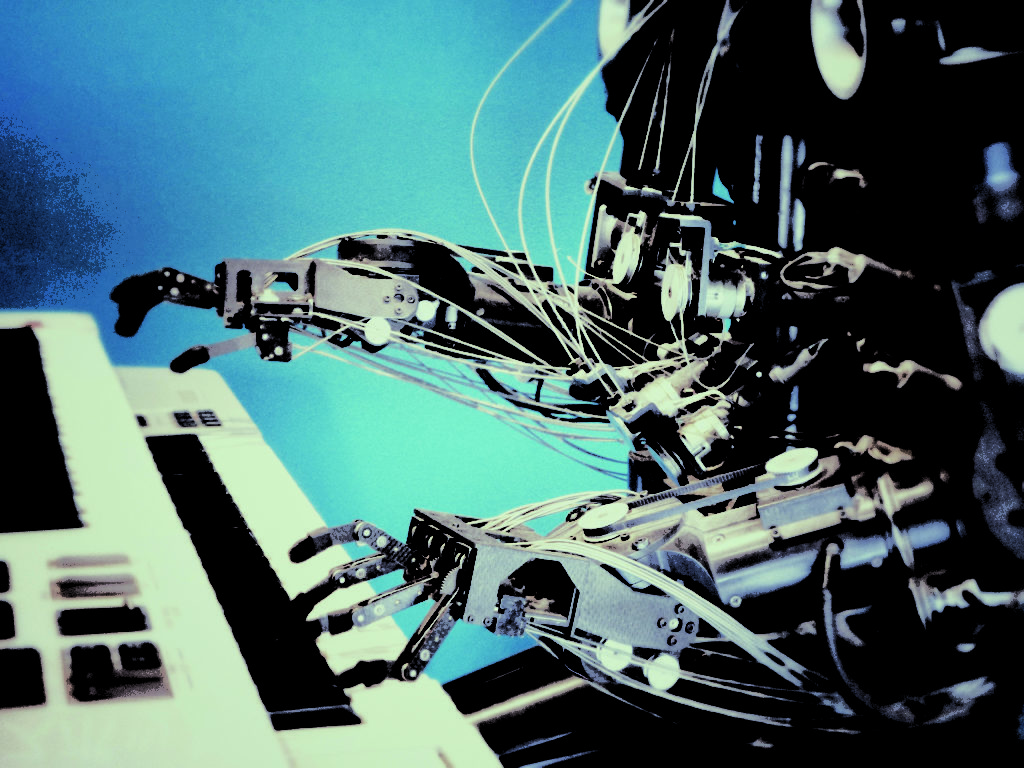

This aspect of the digitization of music has profoundly changed the internal structure of the industry, making many skills obsolete and bringing out new forms of talent. Today, with the advent of artificial intelligence, we must prepare for the next stage of our evolution.

In a context in which artistic production is already, in effect, computerized, machine learning is proving effective even in areas hitherto tied to exclusively human skills: it is the beginning of a phenomenon that we could call the automation of creativity. Beyond demonstrations of musical applications by powerful general-purpose AI such as IBM Watson, we are already seeing the commercial use of specialized AI that can do very sophisticated things (compose, finalize a mix, invent a backing track) faster and at a fraction of the cost of a human counterpart, with surprising results.

Some services eliminate the intervention of a human composer to create music: Aiva (Artificial Intelligence Virtual Artist) is a company whose AI composes “emotional soundtracks” through a web portal; Amper Music offers “intuitive tools for non-musicians” that, thanks to their musical AI, “quickly generate music in the style, duration and structure” the client desires, eliminating the copyright problem. Other companies apply machine learning to audio engineering: iZotope provides professional and amateur sound engineers with AI tools to produce and analyze a song, while Landr offers an online mastering service (the hyper-specialized work of making a song “sound” right for radio, TV or a record) based on machine learning.

Meanwhile, a new category of "music hacker" is exploring more adventurous frontiers: independent researcher CJ Carr has discovered, for example, that by modifying the machine learning algorithms of Lyrebird, invented to create hyper-realistic artificial voices, one can create neural networks capable of producing extremely specific musical styles. From this work the artificial intelligence Dadabot was born, which creates entire albums on its own. British musician Reeps One, in collaboration with Nokia Bell Labs, used Dadabot for a duet with a virtual copy of himself capable of formulating original musical ideas. And these are just a few examples of an ever-growing field, in which we already hear talk of “virtual beings”.

The question that we as composers, artists and producers must ask ourselves is: how will we respond to the automation of the very creativity on which our professions depend? If we rely on established formulas, it will become more and more likely that an intelligent machine will replace us, or at least render obsolete the capabilities that we have laboriously built.

The challenge will be to evolve in two parallel directions: on the one hand, to become stylistically unique and exceptionally competent; on the other, to understand how to use these new tools to enhance our creativity and explore new frontiers. Perhaps the future role of the human artist will be to focus on emotions, leaving the production work to the machines. But the answer to these questions is not obvious.

When it comes to AI-driven disruption, the same examples keep coming up in debates: self-driving trucks, drone deliveries, the automation of manual work. But the automation of creativity is already here, and those who know how to see beyond the code will reap the rewards of the next revolution.

To paraphrase Reeps One, “To see further, we will have to be like dwarves on the shoulders of robots”.

Gadi Sassoon is a composer and producer. He works between Milan, London and Los Angeles. His work appears on Warner, Ninja Tune, NBC Universal, BBC, HBO, Fox, AMC, Disney and many more (gadi.sassoon@gmail.com).