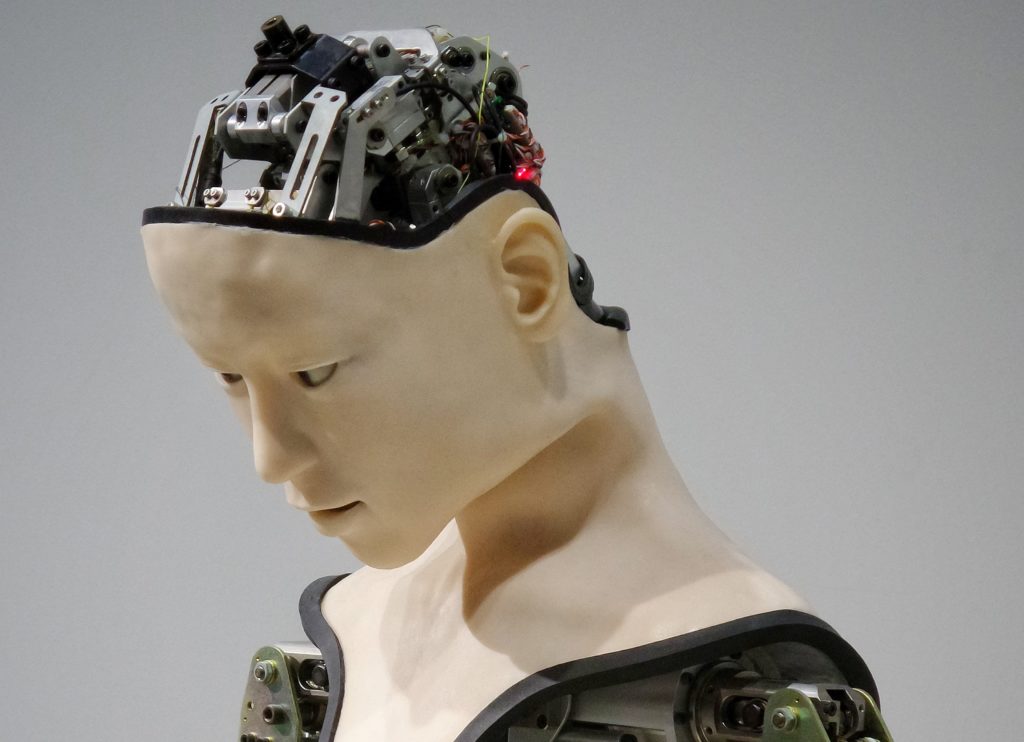

Technology, even in its most advanced forms such as robotics and artificial intelligence (AI), is increasingly part of our daily reality, both at work and at home. Occasionally it raises some concerns, but all in all we live with it well enough. We have had some time to get used to it, probably thanks in part to the fact that AI has long fired the imagination of science fiction writers who, with surprising prophetic ability, have imagined different scenarios, some positive and happy, others dystopian and nightmarish. Let's look at a few.

In Daniel H. Wilson's fine book, Robopocalypse, a computer expert inadvertently frees a sentient AI entity named Archos R-14, which immediately sets out to gleefully kill all of humanity. Its first moves succeed, partly because they are well concealed, and so personal devices, driverless cars, aircraft autopilots, houses run by home automation, lifts, financial programs, health systems and more are all infected. The predictable chain of disasters follows until, after a lot of back and forth, Archos is neutralized and the good guys win (except that, at the last moment, the AI secretly manages to send a message to unknown alien machines). It is a decidedly disturbing and fascinating plot, and it is something of a paradigm for the nightmares that shape our fantasies about artificial intelligence.

In fact, many wonder whether, as AI steadily advances, we really know what we are doing. It is not a trivial question, if serious people like central bankers from around the world, from Draghi (ECB) to Bernanke (Fed) to another half-dozen colleagues, already felt the need three years ago to come together and discuss the issue under the guidance of experts such as David Autor, Joel Mokyr and other luminaries of economics and technology. The discussion was triggered by an essay by Autor himself (with Anna Salomons) entitled "Does Productivity Growth Threaten Employment?" (European Central Bank, 2017).

The apocalypse of the central banker (who is known to have little imagination) concerns serious issues such as employment, productivity and the like. But the central point remains the question asked above: how far should we let AI evolve, give it autonomy, become dependent on it and, why not, become its potential victims?

We had already seen the first examples with the HAL computer in 2001: A Space Odyssey (by the way, how many know that the name HAL derives from the acronym IBM, with each of the three letters shifted back by one?). But also with the androids described by Philip K. Dick, whose fine book Do Androids Dream of Electric Sheep? inspired the Blade Runner films, which perhaps we have not taken seriously enough; and then in the distant future of the Terminator films.

Repeatedly citing science fiction films and books in the context of these new and formidable technologies may seem inappropriate, but it is not. Visionary writers have for decades anticipated what we observe now: Jules Verne and H. G. Wells, for example, had already foreshadowed around the end of the nineteenth century the space travel that we now take completely for granted.

A leading AI expert, Oren Etzioni (CEO of the Allen Institute for Artificial Intelligence), recently invoked in The New York Times the "three laws of robotics" set out by Asimov in 1942 as a good basis for regulating our future relationships with AI and robots. And while Bill Gates has proposed a tax on robots to finance alternatives for those who lose their jobs, Tesla's visionary boss, Elon Musk, has urged the world's rulers to regulate the introduction of artificial intelligence "before it's too late".

Some researchers took a similar view when, some time ago, they decided to end the idyll of Alice and Bob, two obviously not stupid computers that had invented their own language so that their evidently intimate interactions could not be understood by human programmers.

In short, as Luciano Floridi, a philosophy professor at Oxford, has rightly argued in his books, it is essential to raise the question of the relationship between humans and machines, not so much to prevent the intelligence of the latter from overwhelming the intelligence of the former, but to prevent human stupidity from being built on the stupidity of robots. It is a question of logic, as much philosophical as ethical, but in the end terribly practical and concrete.

If we are led to believe that scientists, technologists, entrepreneurs, politicians and the military are rational people, we must also hope that they will not delegate the choices about our safety and our lives to machines. But if we are, as is quite possible, inclined to doubt it, then we must set shared rules for letting driverless cars circulate in the streets of our crowded cities, for allowing financial robots to manage stock exchange operations and people's investments, for authorizing sophisticated software to do legal research, for entrusting medical diagnostics to robotic assistants, or even just for having the lawn cut by autonomous lawnmowers without threatening the paws of the family dog. And even more so, of course, for allowing the creation and use of military robots in theaters of operation.

And this is possible by resorting to increasingly sophisticated approaches to the development of artificial intelligence, whether they are based on bottom-up methodologies (such as deep learning and reinforcement learning) or top-down ones (such as Bayesian systems centered on abstraction skills), all of which basically aim to bring the learning abilities of machines closer to those of humans (see the essay by Alison Gopnik of the University of California, Berkeley, "Making AI More Human", Scientific American, 2017).

After all, we have time to reflect and act judiciously, since according to some it will take at least 20 years to witness the triumph of AI, and according to others no fewer than 120. It is time that should be put to the best possible use.

Enrico Sassoon

Editor-in-chief of Harvard Business Review Italia.